The Definitive Guide to Implementing Data Governance

I spent over 3 months finding and studying the best data governance resources and reading the implementation stories and case studies shared on popular data management forums, Slack communities, and even Reddit and LinkedIn.

And one thing was very clear:

Most "how to" data governance resources fail at the implementation stage.

Why?

Because they were built for a different era. Traditional frameworks like DAMA-DMBOK and DGI's Data Governance Framework suffer from critical limitations.

They emerged when data lived in centralized data warehouses, when regulatory pressures were the primary drivers, and when defensive concerns trumped value creation.

Today's data ecosystem demands something different.

This is why, if you've tried implementing data governance before, you've likely faced these challenges:

- Resistance from teams who see governance as bureaucracy rather than enablement

- Difficulty measuring and demonstrating tangible value

- Frameworks that collapse under real-world complexity

- Technology that promises solutions but creates new problems

This guide takes a practical approach.

If you want to master data governance concepts and start implementing them in your organization, this resource is for you.

Building on our previous discussions about The Rise of Modern Data Governance and the offensive-defensive governance forces, we're now moving from theory to practice!

Let’s start with the bottom line, upfront.

Executive Summary: Implementing Modern Data Governance

This guide provides a practical framework for implementing data governance that creates business value while managing risk. If you're looking to build effective governance in your organization, here are the key principles to guide your journey:

Core Principles of Modern Data Governance:

- Balance value creation (offensive) with risk management (defensive)

- Align governance directly with business objectives and product goals

- Start small with high-value domains, then scale methodically

- Build governance into workflows rather than imposing it as a separate process

- Measure success through business outcomes, not governance activities

Getting Started - Your First 90 Days:

- First 30 days: Assess your current state, identify 1-2 priority domains, establish initial roles

- Days 31-60: Implement basic quality monitoring, enhance discovery capabilities, develop essential policies

- Days 61-90: Measure improvements, expand to additional domains, refine based on feedback

The Framework at a Glance:

Our framework consists of three interconnected layers that build upon your existing technology:

- Foundation: Strategic alignment with business objectives and product vision

- Offensive Governance: Value creation, data democratization, and operational excellence

- Defensive Governance: Compliance, security, and risk management

Throughout this guide, you'll learn how to customize this framework for your specific organizational context and maturity level.

Remember: effective governance isn't built overnight! It's an evolving capability that grows with your organization.

How to Use This Guide

This guide is designed to be useful whether you're just learning about data governance or actively implementing it in your organization. Here's how to navigate this content and find the most relevant starting points for your specific needs.

For Different Reader Journeys

If you're exploring data governance concepts:

- Start with the sections "Executive Summary" and "The Modern Data Governance Framework: An Overview"

- Focus on understanding the balance between offensive and defensive governance. Part 2 of this series, Balancing a Data Governance Strategy: The Defensive-Offensive Framework, might be particularly helpful.

If you're implementing governance now:

- Use the Data Governance Implementation Navigator below to identify your starting point

If you're enhancing existing governance:

- Focus on balancing offensive and defensive capabilities. Use the Data Governance Implementation Navigator below to identify a starting point

- Read the "Building the Tech Foundation: Choosing the Right Toolkit for Governance" section to evaluate your current tools

🌟 Data Governance Implementation Navigator: Start Here 🌟

Use this decision tree to identify where to focus your governance efforts first:

Step 1: What's your primary motivation?

- Regulatory pressure → Start with "Value Protection: Managing Risks and Enforcing Regulatory Compliance"

- Business value creation → Start with "Value Creation: Offensive Governance Tactics"

- Data literacy and democratization → Start with "Democratization: Making Data Work for Everyone"

- Optimizing governance operations → Start with "Operational Excellence: Optimizing Regular Governance Operations"

- Building a data foundation → Start with "Building Your Foundation: Aligning Governance with Business Strategy"

Step 2: What's your organization's data maturity?

- Early stage (undefined data practices, limited tooling) → Focus on basic documentation, core quality rules, and essential access controls

- Growing (some practices established, inconsistent application) → Implement domain ownership, standard quality metrics, and self-service discovery

- Mature (established practices, seeking optimization) → Focus on integration across domains, advanced quality automation, and enhanced democratization

Step 3: What resources do you have available?

- Limited (part-time roles, minimal budget) → Begin with high-value quick wins that require minimal investment

- Moderate (dedicated roles, some budget) → Implement a balanced approach across offensive and defensive capabilities

- Substantial (dedicated team, significant budget) → Build comprehensive capabilities with advanced tooling

Step 4: Which domain should you tackle first? Choose a domain that:

- Is high-value to the business

- Is experiencing painful data issues

- Has engaged stakeholders

- Has a manageable scope

Common domains to start with include customer data, product data, or financial data, depending on your specific business priorities.
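The navigator can also be written down as a small lookup script, which makes it easy to share with stakeholders. The sketch below is a hypothetical Python encoding: the short keys (such as "regulatory" or "early") and the Step 4 scoring rule (count how many of the four criteria a domain meets) are assumptions for illustration, while the section names and recommendations come from the steps above.

```python
# A minimal sketch of the Implementation Navigator as plain lookup tables.
# The short keys are hypothetical labels; the values come from the guide.

MOTIVATION_TO_SECTION = {
    "regulatory": "Value Protection: Managing Risks and Enforcing Regulatory Compliance",
    "value_creation": "Value Creation: Offensive Governance Tactics",
    "democratization": "Democratization: Making Data Work for Everyone",
    "operations": "Operational Excellence: Optimizing Regular Governance Operations",
    "foundation": "Building Your Foundation: Aligning Governance with Business Strategy",
}

MATURITY_TO_FOCUS = {
    "early": ["basic documentation", "core quality rules", "essential access controls"],
    "growing": ["domain ownership", "standard quality metrics", "self-service discovery"],
    "mature": ["integration across domains", "advanced quality automation",
               "enhanced democratization"],
}

RESOURCES_TO_APPROACH = {
    "limited": "high-value quick wins that require minimal investment",
    "moderate": "a balanced approach across offensive and defensive capabilities",
    "substantial": "comprehensive capabilities with advanced tooling",
}

# Step 4 criteria; each candidate domain is scored on how many it meets.
DOMAIN_CRITERIA = ("high_value", "painful_issues", "engaged_stakeholders", "manageable_scope")


def navigate(motivation: str, maturity: str, resources: str) -> dict:
    """Combine Steps 1-3 into a single starting recommendation."""
    return {
        "start_with": MOTIVATION_TO_SECTION[motivation],
        "focus_on": MATURITY_TO_FOCUS[maturity],
        "approach": RESOURCES_TO_APPROACH[resources],
    }


def rank_domains(candidates: list) -> list:
    """Order candidate domains by how many Step 4 criteria they satisfy.

    Ties keep their input order because sorted() is stable.
    """
    def score(domain: dict) -> int:
        return sum(bool(domain.get(c)) for c in DOMAIN_CRITERIA)

    return [d["name"] for d in sorted(candidates, key=score, reverse=True)]


plan = navigate("regulatory", "early", "limited")
print(plan["start_with"])
print(rank_domains([
    {"name": "customer", "high_value": True, "painful_issues": True,
     "engaged_stakeholders": True, "manageable_scope": False},
    {"name": "financial", "high_value": True, "painful_issues": True,
     "engaged_stakeholders": True, "manageable_scope": True},
]))
```

Treating every criterion as equally weighted is a simplification; in practice you might weight business value more heavily than scope.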

Moving Beyond Traditional Frameworks: What Actually Works

Traditional frameworks like DAMA-DMBOK, DCAM, and DGI's Data Governance Framework suffer from critical limitations:

- Rigid processes with centralization bias: They prescribe fixed processes instead of adaptive approaches. They assume centralized control when today's reality is distributed data ownership

- Compliance-first mentality: They prioritize risk management over value creation

- Technology lag: They don't account for modern data stack capabilities. They focus on structured data when most organizations now manage complex, multi-modal data (think data lakes and lakehouses)

How can you tell if a framework will work for your context?

Ask yourself:

"Does this framework acknowledge the reality of how my organization actually works?"

Evaluation criteria for selecting the right approach

When evaluating governance frameworks, look for these qualities:

- Business alignment: Connects governance activities to your specific business objectives

- Balance: Addresses both offensive (value-creating) and defensive (value-protecting, risk-mitigating) needs

- Adaptability: Allows customization to fit your organizational structure and culture. It should offer different implementation paths based on your current capabilities. Modern data governance must be adaptive by design.

The most effective frameworks don't prescribe specific solutions but provide decision frameworks that help you make the right choices for your context.

Truth?

Data governance is never "done." It's an ongoing effort that evolves with your organization.

The Modern Data Governance Framework: An Overview

Establishing a clear framework early on is critical. It clarifies what data governance is and what it is not, helping to avoid confusion, set expectations, and drive adoption.

The framework we’re about to explore addresses both the defensive aspects of governance (compliance, security, risk management) and the offensive elements (business value creation, data democratization, operational excellence) that create tangible business value.

The framework is simple. At its core:

- Defensive Data Governance protects business value.

- Offensive Data Governance drives business value.

The framework consists of three interconnected layers:

- The Foundation

  - Strategic Governance Inputs: The foundation that aligns governance with business goals

  - Technology Toolkit: The framework sits atop a technology layer that provides the tools and infrastructure to implement the required capabilities effectively.

- Offensive Data Governance

  - Business Value Creation: Enables trust in and usage of data

  - Data Democratization: Makes data accessible to all appropriate stakeholders

  - Operational Excellence: Optimizes the day-to-day practices that sustain governance

- Defensive Data Governance

  - Compliance: Monitors the regulatory landscape and ensures compliance standards are met.

  - Security: The foundation of protection: implementing controls, managing access, and safeguarding data throughout its lifecycle. While compliance tells you what to protect, security determines how to protect it.

  - Risk Management: Beyond immediate security concerns, this involves anticipating threats, managing vulnerabilities, and maintaining data integrity across the organization.

Each layer contains specific capabilities and activities that organizations develop according to their maturity level and needs.

The framework deliberately incorporates both offensive and defensive forces, building on the balance we explored in depth in our previous guide on the offensive-defensive framework. For specific strategies on calibrating this balance for your organization's unique context, refer back to that resource.

Feeling Overwhelmed? You're Closer Than You Think!

Most organizations already have foundational elements of governance in place, even if they don't call it "data governance." Look for these existing capabilities you can build upon:

- Documentation: Product specs, API documentation, and data dictionaries

- Quality checks: Validation rules in applications, data pipeline tests

- Access controls: User permissions, role-based security

- Data ownership: Tribal knowledge of who manages key systems

Instead of building from scratch, inventory these existing capabilities and formalize them within your governance framework. This approach can accelerate implementation and increase adoption by building on familiar practices.

In the following sections, we'll explore each layer of the framework in detail, with practical guidance on implementation.

Building Your Foundation: Aligning Governance with Business Strategy

Effective governance doesn't start with policies or tools - it starts with strategy.

The foundational layer of the framework ensures your governance efforts address the right problems and deliver the right value for your specific context.

Step 1: Aligning with company mission and business objectives

Generic governance programs fail. Full stop.

Your governance approach must explicitly connect to your organization's strategic priorities:

- What business outcomes will improved data governance enable?

- Which strategic initiatives depend on better data quality or accessibility?

- What competitive advantages could stronger governance create?

- How will governance support your organization's mission?

Document these connections explicitly. They become your most powerful tool for securing buy-in and resources.

Every organization needs to strike a balance between defensive (risk-focused) and offensive (value-creation) governance capabilities based on their unique context:

- Growth-focused companies typically need stronger offensive capabilities to support rapid innovation and market expansion

- Companies in crisis or recovery (such as post-breach) need to emphasize defensive measures

- Regulated industries like healthcare and finance require robust defensive controls to meet compliance requirements

- Highly competitive markets push organizations toward offensive capabilities to maintain market position

For example:

- A healthcare provider might align governance with patient outcomes and care coordination

- A retailer might focus on customer experience and supply chain optimization

- A financial services firm might emphasize risk management and customer trust

Ask yourself:

- What's your primary goal? Growth demands offensive focus, while risk mitigation requires defensive strength

- What's your time horizon? Short-term compliance needs might require immediate defensive action

- What resources do you have? Limited resources might force you to prioritize one force initially

This alignment shapes every subsequent governance decision - from which domains to prioritize to which capabilities to invest in first. This balance isn't static - it shifts based on your evolving business context.

Step 2: Translating product vision into governance requirements

For product and technology teams, governance must support and enable product vision - not constrain it.

Ask these critical questions:

- What data capabilities do your products require?

- How does data quality impact user experience?

- What governance controls would users value as features?

- How could governance enable faster product development?

Map governance initiatives directly to product roadmaps. This creates natural alignment between governance teams and product teams.

Your industry context significantly shapes this alignment:

- Technology companies often lean toward offensive governance to drive rapid innovation

- Healthcare or financial organizations must balance innovation with strong defensive controls for regulations and standards like HIPAA or BCBS 239

- Companies handling sensitive data (health information, financial records) need more robust protective measures than those managing primarily public data

Consider how governance can:

- Improve data quality for customer-facing products

- Enable self-service access that accelerates development

- Provide compliance capabilities that become product differentiators

- Create documentation that supports both internal and external users

When governance is viewed as a product enabler rather than a development bottleneck, adoption typically rises sharply. Data contracts between producers and consumers can formalize these relationships, establishing clear expectations for quality, availability, and appropriate use.

Step 3: Conducting a governance maturity assessment

You can't map your journey without knowing your starting point.

A modern governance maturity assessment should evaluate your capabilities across the key dimensions of your governance framework, recognizing that federated, domain-driven approaches are often more effective than centralized control.

For each dimension, here’s how to read the leveling:

- Level 1: No (Issues are dealt with as they appear)

- Level 2: Beginning (Importance recognized, initial efforts underway)

- Level 3: In Progress (Policies being created, people being appointed)

- Level 4: Yes (Organization enforcing policies with implemented solutions)

- Level 5: Optimized (Solution working with only minor improvements needed)

Let's create an assessment checklist aligned with the dimensions within the three layers of our framework:

Foundation Layer Assessment

- Strategic Alignment

  - Level 1: No explicit connection between data and business strategy

  - Level 2: Basic recognition of data's strategic importance

  - Level 3: Governance priorities explicitly linked to business objectives

  - Level 4: Governance initiatives integrated into strategic planning

  - Level 5: Data governance embedded in business strategy formulation

- Architecture & Technology Integration

  - Level 1: Governance separate from technology decisions

  - Level 2: Basic technology enablement of governance

  - Level 3: Domain-oriented architecture supporting distributed governance

  - Level 4: Integrated tooling across domains with common standards

  - Level 5: Federated governance capabilities embedded in technology platforms

Offensive Governance Assessment

- Value Creation Capabilities

  - Level 1: No formal processes for data-driven value creation

  - Level 2: Basic quality initiatives in select domains

  - Level 3: Domain-specific data quality frameworks with clear ownership

  - Level 4: Proactive quality management across domains

  - Level 5: Embedded quality automation driving business outcomes

- Data Democratization

  - Level 1: Access restricted and knowledge siloed

  - Level 2: Basic documentation within domains

  - Level 3: Domain-specific discovery mechanisms

  - Level 4: Cross-domain discovery with federated access

  - Level 5: Seamless access appropriate to user context with embedded governance

- Operational Excellence

  - Level 1: Ad-hoc governance operations

  - Level 2: Basic operational processes established

  - Level 3: Domain-specific governance operations with performance metrics

  - Level 4: Continuous improvement processes across domains

  - Level 5: Self-optimizing governance operations integrated into workflows

Defensive Governance Assessment

- Compliance Capabilities

  - Level 1: Reactive compliance approach

  - Level 2: Basic compliance policies defined

  - Level 3: Domain-specific compliance controls with clear accountability

  - Level 4: Integrated compliance across domains with monitoring

  - Level 5: Adaptive compliance framework that evolves with regulations

- Security Implementation

  - Level 1: Basic security controls only

  - Level 2: Security policies with minimal connection to governance

  - Level 3: Domain-appropriate security controls with governance alignment

  - Level 4: Integrated security and governance framework

  - Level 5: Context-aware security embedded in data access and usage

- Risk Management

  - Level 1: Undefined risk assessment

  - Level 2: Basic risk identification

  - Level 3: Domain-specific risk management practices

  - Level 4: Cross-domain risk visibility and mitigation

  - Level 5: Integrated risk management enabling appropriate risk-taking

Assess your current state across these dimensions, remembering that:

- Not all dimensions need equal maturity: prioritize based on your business context

- Domain-specific variation is expected: different business domains may appropriately mature at different rates

- The goal isn't centralization: higher maturity means better alignment and integration, not increased control

- Value creation should guide priorities: focus first on dimensions that deliver tangible business value

Remember that not every organization needs to reach level 5 in every dimension. The appropriate maturity targets depend on your specific context:

- A startup might prioritize Strategic Alignment (level 3) and Value Creation (level 3) while maintaining only basic Compliance (level 2) and Security (level 2) capabilities. This allows them to focus resources on growth while managing essential risks.

- A regulated enterprise like a bank or healthcare organization might need advanced Compliance (level 4-5) and Security (level 4) capabilities, while still developing their Data Democratization (level 3) approach as they balance access with protection.

- A tech company might excel at Data Democratization (level 4-5) and Architecture Integration (level 4) while maintaining moderate Risk Management (level 3) appropriate to their data sensitivity.

This context-aware approach ensures you invest in the right capabilities at the right time, avoiding both under-governance (too much risk) and over-governance (too many restrictions) while enabling domain-specific ownership that aligns with your organizational structure.
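Once you have scored each dimension, a simple gap analysis shows where to invest first. The Python sketch below assumes you record current levels and context-specific targets on the guide's 1-5 scale; the scores are invented for illustration, and the targets mirror the startup example above. Only dimensions with a target are checked, which reflects the point that not every dimension needs to reach Level 5.

```python
# Sketch of a maturity gap analysis on the guide's 1-5 scale.
# Current scores and targets below are illustrative, not benchmarks.

DIMENSIONS = {
    "Strategic Alignment", "Architecture & Technology Integration",
    "Value Creation", "Data Democratization", "Operational Excellence",
    "Compliance", "Security", "Risk Management",
}


def maturity_gaps(current: dict, targets: dict) -> list:
    """Return (dimension, current, target, gap) for every dimension below target.

    Only dimensions listed in `targets` are checked. Unassessed dimensions
    count as Level 1.
    """
    gaps = []
    for dim, target in targets.items():
        if dim not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dim}")
        if not 1 <= target <= 5:
            raise ValueError(f"target for {dim} must be between 1 and 5")
        level = current.get(dim, 1)
        if level < target:
            gaps.append((dim, level, target, target - level))
    # Largest gaps first; sorted() keeps ties in the order of `targets`.
    return sorted(gaps, key=lambda g: g[3], reverse=True)


# Targets modeled on the startup example above.
startup_targets = {"Strategic Alignment": 3, "Value Creation": 3,
                   "Compliance": 2, "Security": 2}
assessment = {"Strategic Alignment": 1, "Value Creation": 2,
              "Compliance": 2, "Security": 1}

for dim, level, target, gap in maturity_gaps(assessment, startup_targets):
    print(f"{dim}: Level {level} -> Level {target} (gap {gap})")
```

Ranking purely by gap size is a starting point; you would still weigh each gap against the business value it unlocks.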

Step 4: Choosing the right architectural approach for your context

Your governance architecture (centralized, federated, decentralized, or hybrid) must match your organizational reality.

- Centralized governance works for smaller organizations or those with strict regulatory requirements

- Federated governance fits organizations with strong domain ownership and distributed decision-making

- Decentralized governance represents fully distributed approaches where domains operate with high autonomy and minimal central coordination

- Hybrid approaches combine central oversight for critical policies with domain-level implementation

Modern approaches to data governance are increasingly recognizing the value of domain-driven, decentralized architectures for managing enterprise data. Two of my favorite architectures to manage enterprise data are Data Mesh and the Meta Grid.

Data Mesh Architecture

Data Mesh applies domain-driven design principles to data governance by recognizing a fundamental truth: the teams closest to business domains should govern their own data while adhering to organization-wide standards.

In a Data Mesh:

- Domain teams own both operational processes and data quality

- Each domain provides data in standardized, consumable formats

- Governance standards apply consistently across domains while accommodating domain-specific needs

- Common infrastructure enables this distributed but connected approach

A central platform team provides infrastructure and governance frameworks, while domain teams maintain autonomy over their data assets.

Meta Grid Architecture

For organizations struggling with proliferating metadata repositories, the Meta Grid offers a decentralized approach to metadata management. This architecture acknowledges that:

- Metadata is stored in multiple repositories that tend to become siloed

- Each repository represents a different perspective on the enterprise IT landscape

- These repositories are rarely connected, creating confusion and redundant work

The Meta Grid typically organizes metadata repositories into domains like:

- IT Management (EAM, ITSM, CMDB, etc.)

- Data Management (data catalogs, data quality tools, data warehouse, AIM, etc.)

- Information Management (BPMS, PIMS, ISMS, RIMS)

- Knowledge Management (CMS, KMS, LMS, QMS, CMSy)

The right architecture depends on your:

- Organizational structure

- Data landscape complexity

- Regulatory environment

- Existing decision-making culture

- Available resources

- Clarity of domain boundaries

Be honest about how decisions are actually made in your organization. Governance models that fight your culture will fail, regardless of their theoretical merit.

Value Creation: Enforce Business Rules through Data Quality and Observability

Governance without value creation is just bureaucracy.

The offensive elements of your governance program generate tangible benefits that justify investment and drive adoption. They transform governance from a cost center to a value creator.

Step 1: Establishing strong data quality foundations

Poor data quality erodes trust and undermines analytics initiatives. Ultimately, it slows down decision-making.

How does ensuring data quality drive offensive governance?

Data quality is the foundation of offensive governance because it enables trustworthy insights that drive decision-making. Without it, your "offensive" capabilities collapse under the weight of uncertainty.

Data quality implementation steps that work, in descending order of impact:

- Start with business impact, not generic metrics: Begin by identifying which data elements directly affect critical business outcomes, then build quality rules specifically for those elements. For example, a retail organization should first focus on improving product inventory accuracy because it directly impacts both customer satisfaction and revenue, rather than starting with general completeness metrics across all datasets. This targeted approach ensures quality efforts create immediate, visible business value.

- Implement tiered quality requirements aligned with medallion architecture: The Bronze-Silver-Gold pattern of medallion architecture provides a natural framework for implementing tiered quality requirements:

  - Gold layer (Tier 1/Critical): Highly curated business-ready data like customer identification and financial records (99.9%+ accuracy) - enforces strict business rules and quality controls

  - Silver layer (Tier 2/Important): Cleansed and conformed data for operational systems and product information (97%+ accuracy) - applies standardization, deduplication, and domain validation

  - Bronze layer (Tier 3/Standard): Raw ingested data for exploratory analytics and trend analysis (90%+ accuracy) - captures data as-is with minimal validation

  This tiered approach enables different business functions to access the appropriate quality level for their needs. Analytics teams can quickly access Bronze layer data for early insights, while financial reporting would only use Gold layer data that has passed through the complete quality pipeline. The medallion architecture provides both the conceptual framework and technical implementation pattern to support this progressive quality approach.

- Create domain-specific quality rules: Generic rules rarely work across domains. Marketing data quality might emphasize completeness of campaign attributes, while supply chain data might prioritize accuracy of inventory counts.

- Automate quality workflow: Manual quality checks don't scale. A thoughtfully designed quality pipeline could flag issues, route them to the right data steward, track resolution time, and provide analytics on common issues.
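The tiered thresholds, domain-specific rules, and automated routing above can be combined into a single quality gate. The Python sketch below is illustrative: it treats "accuracy" as the share of records passing every domain rule, and the steward names, the `on_hand` field, and the fallback "governance-team" queue are hypothetical. Tracking resolution time is left out to keep it short.

```python
from dataclasses import dataclass
from typing import Optional

# Minimum share of records that must pass every rule, per medallion tier,
# using the accuracy targets from the list above.
TIER_THRESHOLDS = {"gold": 0.999, "silver": 0.97, "bronze": 0.90}

# Hypothetical domain-to-steward routing table.
STEWARDS = {"supply_chain": "supply-chain-steward", "marketing": "marketing-steward"}


@dataclass
class QualityResult:
    dataset: str
    tier: str
    pass_rate: float
    passed: bool
    routed_to: Optional[str]  # steward notified when the tier threshold is missed


def evaluate(dataset: str, domain: str, tier: str, records: list,
             rules: list) -> QualityResult:
    """Apply domain-specific rules and compare the pass rate with the tier threshold."""
    if not records:
        raise ValueError("no records to evaluate")
    passing = sum(all(rule(r) for rule in rules) for r in records)
    pass_rate = passing / len(records)
    passed = pass_rate >= TIER_THRESHOLDS[tier]
    # Unknown domains fall back to a hypothetical central governance queue.
    routed_to = None if passed else STEWARDS.get(domain, "governance-team")
    return QualityResult(dataset, tier, pass_rate, passed, routed_to)


# Supply chain rule: inventory counts must be non-negative integers.
def valid_inventory_count(record: dict) -> bool:
    count = record.get("on_hand")
    return isinstance(count, int) and count >= 0


inventory = [{"sku": f"SKU-{i}", "on_hand": 5} for i in range(98)]
inventory += [{"sku": "SKU-X", "on_hand": -1}, {"sku": "SKU-Y", "on_hand": None}]

print(evaluate("inventory", "supply_chain", "silver", inventory, [valid_inventory_count]))
print(evaluate("inventory", "supply_chain", "gold", inventory, [valid_inventory_count]))
```

With 98 of 100 records passing, the same dataset clears the Silver bar but misses the Gold bar, so only the Gold evaluation is routed to a steward.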

Quality initiatives fail when they attempt to boil the ocean. Define concrete, achievable goals that deliver visible business impact. Start small. Focus on the 20% of data assets that drive 80% of business value. For example, a manufacturing company might start with just product quality data, where improvements could directly reduce warranty claims.

QUICK WIN: Implement basic data quality rules for one critical dataset. Focus on completeness and accuracy of the 5-7 most important fields. Even this limited implementation can significantly improve decision quality.
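For the quick win, a few lines of Python are often enough to start. The sketch below assumes a hypothetical customer dataset with five critical fields. Because true accuracy needs a trusted reference to compare against, it measures validity (does a present value pass a simple check?) as a stand-in; the checks themselves are illustrative and should be replaced with your own business rules.

```python
import re
from datetime import date

# Hypothetical critical fields for a customer dataset, each paired with a
# simple validity check used here as a stand-in for accuracy.
CRITICAL_FIELDS = {
    "customer_id": lambda v: isinstance(v, str) and v.startswith("C"),
    "email": lambda v: (isinstance(v, str)
                        and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None),
    "country": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
    "signup_date": lambda v: isinstance(v, date) and v <= date.today(),
    "lifetime_value": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def quality_report(records: list, rules: dict) -> dict:
    """Completeness and validity per critical field, as fractions between 0 and 1."""
    report = {}
    for field, is_valid in rules.items():
        values = [r.get(field) for r in records]
        present = [v for v in values if v is not None and v != ""]
        report[field] = {
            "completeness": len(present) / len(values) if values else 0.0,
            # Validity is measured only over values that are present.
            "validity": (sum(1 for v in present if is_valid(v)) / len(present)
                         if present else 0.0),
        }
    return report


customers = [
    {"customer_id": "C001", "email": "ana@example.com", "country": "US",
     "signup_date": date(2023, 5, 1), "lifetime_value": 120.0},
    {"customer_id": "C002", "email": "not-an-email", "country": "",
     "signup_date": date(2023, 6, 1), "lifetime_value": 0},
]

for field, stats in quality_report(customers, CRITICAL_FIELDS).items():
    print(field, stats)
```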

Step 2: Implementing effective data observability

You can't govern what you can't see.

How does data observability drive offensive governance?

Observability transforms governance from reactive to proactive. Instead of discovering problems after they've affected decisions, you can identify issues at the source and address them before impact spreads.

Data observability provides visibility into your data's health, usage, and lineage. It creates the foundation for proactive governance by answering:

- Is data arriving on time and complete?

- Are quality metrics within acceptable ranges?

- Who is using which data assets and how?

- Where did this data originate and how was it transformed?

- When and why did data anomalies occur?
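The first question, whether data is arriving on time and complete, can be answered with three basic signals: freshness, volume, and schema drift. The Python sketch below assumes batch metadata (load time, row count, column names) is available from your pipeline; the dictionary keys, the six-hour freshness window, and the default ±20% volume tolerance are hypothetical choices.

```python
from datetime import datetime, timedelta, timezone


def health_check(batch: dict, expectations: dict, now: datetime = None) -> dict:
    """Answer 'is data arriving on time and complete?' for one loaded batch.

    `batch` and `expectations` use hypothetical keys; in practice these values
    would come from pipeline metadata or an observability tool.
    """
    now = now or datetime.now(timezone.utc)
    expected_rows = expectations["expected_rows"]
    tolerance = expectations.get("row_tolerance", 0.2)  # +/-20% by default
    expected_cols = set(expectations["columns"])
    actual_cols = set(batch["columns"])
    return {
        "fresh": now - batch["loaded_at"] <= expectations["max_age"],
        "volume_ok": abs(batch["row_count"] - expected_rows) <= tolerance * expected_rows,
        "missing_columns": sorted(expected_cols - actual_cols),
        "unexpected_columns": sorted(actual_cols - expected_cols),
    }


now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
batch = {
    "loaded_at": now - timedelta(hours=2),
    "row_count": 9_000,
    "columns": ["order_id", "customer_id", "order_total", "channel"],
}
expectations = {
    "max_age": timedelta(hours=6),
    "expected_rows": 10_000,
    "columns": ["order_id", "customer_id", "order_total", "order_date"],
}
print(health_check(batch, expectations, now=now))
```

Here the batch is fresh and within volume tolerance, but the check surfaces a schema change: `order_date` is missing and an unexpected `channel` column has appeared.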

Data observability implementation steps that work, in descending order of impact:

- Monitor data at key transition points: Ingestion, transformation, and consumption are critical junctions to monitor. For example, a financial services firm would typically prioritize implementing validation checks at each ETL stage, dramatically reducing issues that reach downstream applications.

- Implement domain-specific anomaly detection: Generic anomaly detection often generates false positives. Domain-specific rules (like identifying when customer churn suddenly exceeds 3 standard deviations from the norm in a specific region) can provide more actionable signals.

- Create observability dashboards for different personas: Technical teams need different signals than business users. A well-designed observability implementation provides both technical metrics (freshness, volume, schema changes) and business metrics (completeness of customer profiles, accuracy of forecasts).

- Create lineage for high-value data flows: Complete lineage for everything is overwhelming. So, start with your most critical data flows. A healthcare provider might focus on patient data lineage first, as errors there have the highest operational and compliance impact.
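The churn example in the list above is a per-region z-score check: compare today's value with that region's own history rather than with a global baseline. The Python sketch below uses the standard library's `statistics` module; the churn figures are invented, and only upward spikes are flagged because the example is about churn suddenly exceeding the norm.

```python
from statistics import mean, stdev


def churn_anomalies(history: dict, today: dict, threshold: float = 3.0) -> dict:
    """Flag regions whose churn rate today sits more than `threshold` standard
    deviations above that region's own history (a per-region z-score)."""
    flagged = {}
    for region, past in history.items():
        if region not in today or len(past) < 2:
            continue  # stdev() needs at least two data points
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            continue  # a perfectly flat history gives no usable spread
        z = (today[region] - mu) / sigma
        if z > threshold:
            flagged[region] = round(z, 1)
    return flagged


history = {
    "EMEA": [0.020, 0.022, 0.021, 0.019, 0.020],
    "APAC": [0.030, 0.031, 0.029, 0.030, 0.030],
}
today = {"EMEA": 0.045, "APAC": 0.031}
print(churn_anomalies(history, today))
```

Only EMEA is flagged: APAC's small uptick is within its normal range, which is exactly the kind of false positive a global threshold might raise.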

Observability produces continuous signals that guide governance priorities and interventions. When implemented thoughtfully, it creates a feedback loop that continuously improves data quality.
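Lineage for a high-value flow can start as a simple graph in which each asset lists the assets it is built from. The Python sketch below uses a hypothetical patient-data flow (all asset names are invented) and walks the graph breadth-first to answer "where did this data originate?", returning the root sources that feed a dashboard.

```python
from collections import deque

# Hypothetical lineage for a healthcare provider's patient data flow:
# each asset maps to the assets it is built from.
LINEAGE = {
    "dashboard.patient_readmissions": ["gold.patient_encounters"],
    "gold.patient_encounters": ["silver.encounters", "silver.patients"],
    "silver.encounters": ["bronze.ehr_encounters"],
    "silver.patients": ["bronze.ehr_patients", "bronze.registration_forms"],
}


def upstream(asset: str, lineage: dict) -> list:
    """Breadth-first walk returning every asset that feeds `asset`, nearest first."""
    seen, order = set(), []
    queue = deque(lineage.get(asset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # shared upstream assets are visited once
        seen.add(node)
        order.append(node)
        queue.extend(lineage.get(node, []))
    return order


def root_sources(asset: str, lineage: dict) -> list:
    """Upstream assets with no parents of their own, i.e. where the data originates."""
    return [n for n in upstream(asset, lineage) if not lineage.get(n)]


print(root_sources("dashboard.patient_readmissions", LINEAGE))
```

When a patient metric looks wrong, this narrows the investigation to three source systems instead of the entire platform.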

Step 3: Defining and enforcing business rules and data contracts

Business rules and data contracts formalize expectations between data producers and consumers.

Business rules are the governing principles that define how data should be structured, validated, and used within your organization. They include:

- Validation rules that enforce data integrity

- Transformation logic that standardizes data formats

- Decision criteria that determine how data is processed or classified

- Relationships between different data elements

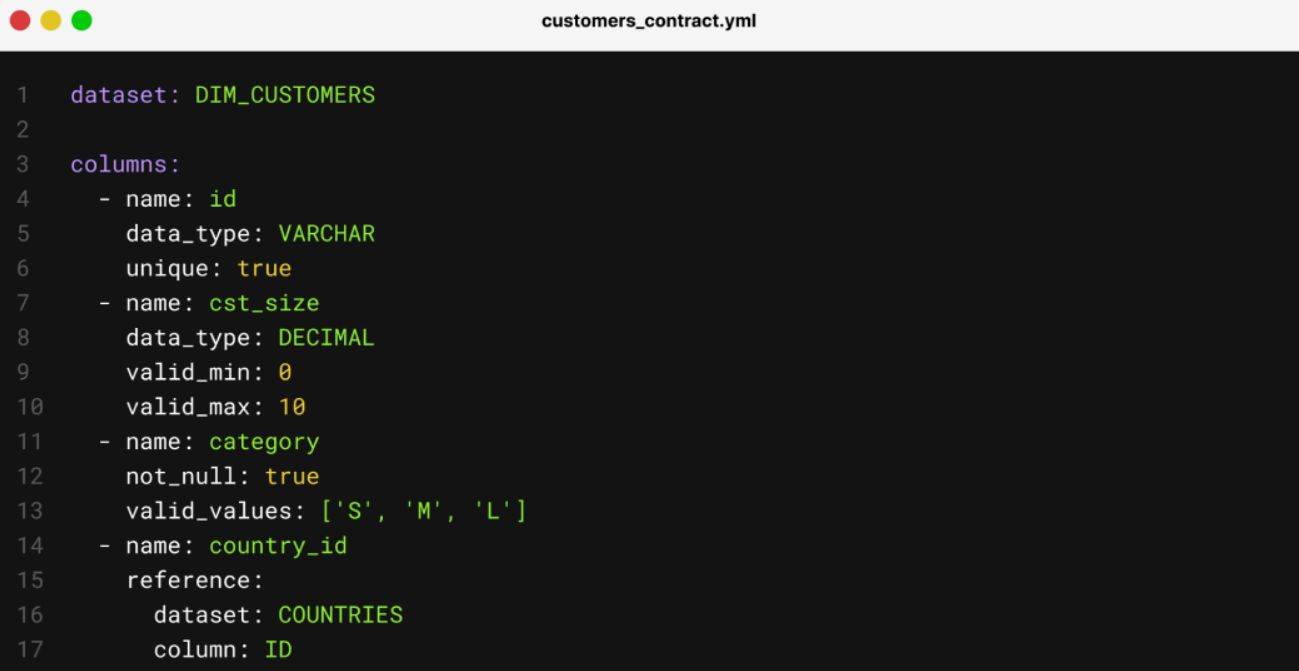

Data contracts are formal specifications that define:

- What data will be provided and in what form

- Expected quality levels and service standards

- How data will be used and by whom

- Responsibilities of both data producers and consumers

These contracts serve as the bridge between governance principles and technical implementation, translating high-level policies into specific requirements:

- Format rules: Standardize how data is structured and presented. For example, a Social Security Number might be specified as XXX-XX-XXXX with validation for the correct number of digits and appropriate hyphenation.

- Integrity rules: Ensure logical relationships within the data remain valid. A sales system could specify that order dates must precede shipping dates, with a maximum allowable lag between the two.

- Domain rules: Define acceptable values within a field. For SSNs, this might include validation that the first three digits are valid area numbers according to SSA guidelines, or that test values (like 999-99-9999) are properly flagged.

- KPIs and SLAs: Establish measurable expectations for data delivery. A marketing data contract might specify 99.5% completeness for campaign attribution data with no more than 4-hour latency.
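Each rule type above translates into a small, testable check. The Python sketch below is one possible encoding, under a few stated assumptions: the SSN domain rule uses the SSA's published unassigned ranges (area 000, 666, or 900-999; group 00; serial 0000), "order dates must precede shipping dates" is read as "on or before" to allow same-day shipping, and the 14-day maximum lag is a hypothetical value.

```python
import re
from datetime import date, timedelta

SSN_PATTERN = re.compile(r"[0-9]{3}-[0-9]{2}-[0-9]{4}")


def ssn_format_ok(ssn: str) -> bool:
    """Format rule: exactly XXX-XX-XXXX with digits and hyphens in place."""
    return SSN_PATTERN.fullmatch(ssn) is not None


def ssn_domain_ok(ssn: str) -> bool:
    """Domain rule: reject numbers the SSA does not assign (area 000, 666 or
    900-999, group 00, serial 0000). This also catches 999-99-9999."""
    if not ssn_format_ok(ssn):
        return False
    area, group, serial = ssn.split("-")
    return (area not in ("000", "666") and not area.startswith("9")
            and group != "00" and serial != "0000")


def order_dates_ok(order_date: date, ship_date: date, max_lag_days: int = 14) -> bool:
    """Integrity rule: shipping happens on or after ordering, within a maximum lag.
    Same-day shipping and the 14-day default are assumptions."""
    return order_date <= ship_date <= order_date + timedelta(days=max_lag_days)


def sla_ok(completeness: float, latency: timedelta,
           min_completeness: float = 0.995,
           max_latency: timedelta = timedelta(hours=4)) -> bool:
    """KPI/SLA rule from the marketing example: 99.5% completeness, at most 4 hours latency."""
    return completeness >= min_completeness and latency <= max_latency


print(ssn_format_ok("123456789"), ssn_domain_ok("999-99-9999"))
print(order_dates_ok(date(2024, 3, 1), date(2024, 3, 3)))
print(sla_ok(0.996, timedelta(hours=3)))
```

Keeping each rule as its own function makes it easy to attach to the contract it belongs to and to report exactly which rule a record violated.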

Data contracts differ from data SLAs in an important way: contracts define what's being delivered, while SLAs measure how well it's delivered. Think of ordering a meal - the contract specifies the ingredients and preparation (what you get), while the SLA guarantees delivery time and temperature (how well you get it).

Always start with critical data interfaces: the points where data crosses team or system boundaries. Document existing expectations before attempting to improve them. Use contracts to make implicit assumptions explicit.

A retail company implementing a customer 360 initiative could begin with contracts between the e-commerce team (providing online purchase data) and the customer insights team (consuming the integrated view), clearly defining field mappings, refresh frequency, and quality thresholds.

QUICK WIN: Create a simple .txt Data Contract

A data contract doesn't need to be a long, complex JSON or YAML file with a lot of information and checks. Create a simple .txt document that includes just two essentials:

- Metadata: contract owner, when it was last updated, purpose of data

- Schema: expected structure (fields, types, relationships), nested objects

This creates immediate clarity for both data producers and consumers without requiring complex specifications. If you want to be slightly more comprehensive, you can add quality expectations (update frequency, completeness thresholds).

A data contract should be easy to write. Easy to read. Easy to maintain.
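Even a .txt contract can be checked automatically. The Python sketch below assumes a hypothetical layout of `key: value` metadata lines followed by a `schema:` line and one `field: type` line per field. It handles flat fields only (no relationships or nested objects) and understands just the four type names in `TYPES`.

```python
from datetime import date

# Hypothetical plain-text contract: "key: value" metadata lines, then a
# "schema:" line followed by "field: type" lines.
CONTRACT_TXT = """
# Contract: online orders for the customer 360 view
owner: ecommerce-team
last_updated: 2024-05-01
purpose: Online purchase data consumed by the customer insights team

schema:
order_id: string
customer_id: string
order_total: float
order_date: date
"""

# Type names this sketch understands; extend as needed.
TYPES = {"string": str, "int": int, "float": (int, float), "date": date}


def parse_contract(text: str) -> tuple:
    """Split a plain-text contract into (metadata, schema) dictionaries."""
    metadata, schema = {}, {}
    section = metadata
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.lower() == "schema:":
            section = schema
            continue
        key, _, value = line.partition(":")
        section[key.strip()] = value.strip()
    return metadata, schema


def check_record(record: dict, schema: dict) -> list:
    """List contract violations for one record: missing, mistyped, or unexpected fields."""
    problems = []
    for field, type_name in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], TYPES[type_name]):
            problems.append(f"{field}: expected {type_name}")
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


metadata, schema = parse_contract(CONTRACT_TXT)
print(metadata["owner"], schema)
print(check_record({"order_id": "O-1", "order_total": "19.99",
                    "order_date": date(2024, 5, 1), "coupon": "SPRING"}, schema))
```

The contract stays human-readable, yet producers can run the same file against sample records before shipping a change.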

Step 4: Implementing systematic data remediation

Data remediation goes beyond fixing individual issues - it's about systematically improving the data ecosystem to prevent similar problems from recurring.

Data remediation implementation steps that work, in descending order of impact:

- Map feedback loops to data sources: Connect remediation findings (root causes) back to data creators. A manufacturer discovering quality issues in supplier data might implement automated validation at the submission point rather than just cleaning the data downstream. A financial services firm might trace an incorrect customer classification back to ambiguous onboarding form design, leading to both immediate fixes and form redesigns.

- Use patterns of remediation to refine governance policies: Each issue becomes an opportunity to strengthen the governance framework. A retailer noticing repeated product categorization errors might develop a more comprehensive taxonomy and classification guidelines based on remediation patterns. Similarly, when a healthcare provider discovers inconsistent patient address formats, they could implement standardization rules at all data entry points, not just correct existing records.

- Build self-healing capabilities for known issue patterns: An e-commerce platform could develop automation to standardize address formats based on postal service guidelines without manual intervention.
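A minimal sketch of such a self-healing rule, assuming US-style addresses and using only a small, illustrative subset of USPS Publication 28 street-suffix abbreviations. A production rule would load the full table and handle secondary units, directionals, and edge cases such as street names that contain a suffix word:

```python
import re

# Illustrative subset of USPS Publication 28 street-suffix abbreviations
SUFFIXES = {"STREET": "ST", "AVENUE": "AVE", "BOULEVARD": "BLVD", "ROAD": "RD", "DRIVE": "DR"}

def standardize_address(raw: str) -> str:
    """Uppercase, drop periods and commas, collapse whitespace, abbreviate known suffixes."""
    cleaned = re.sub(r"[.,]", " ", raw.upper())
    return " ".join(SUFFIXES.get(token, token) for token in cleaned.split())

print(standardize_address("123  Main Street."))    # -> 123 MAIN ST
print(standardize_address("9 Elm avenue, Apt 4"))  # -> 9 ELM AVE APT 4
```

Because the rule is idempotent (already-standardized addresses pass through unchanged), it is safe to run automatically on every load.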

Effective remediation programs transform data quality from reactive to proactive by systematically addressing root causes, not just symptoms. The most mature organizations make remediation a continuous improvement process that strengthens the entire data ecosystem.

How to measure and communicate value creation through governance?

What gets measured gets managed and funded.

Track and report on governance initiatives using metrics that matter to leadership:

- Time savings: A financial institution could measure reduced time to prepare regulatory reports - potentially cutting days of manual reconciliation work from each reporting cycle.

- Quality improvements: A healthcare provider might track the reduction in duplicate patient records, potentially improving care coordination and reducing unnecessary tests.

- Risk reduction: An energy company could quantify potential regulatory fines avoided through improved data controls and documentation.

- Efficiency gains: A manufacturer might measure the acceleration in product development cycles by providing faster, more reliable access to test data.

- Usage increases: A retailer could track increased adoption of governed data assets, showing how standardized, trusted data spreads throughout the organization.

These metrics are a safe starting point in most cases, but what matters most is linking them to business outcomes wherever possible.

Compare these two ways of reporting the same improvement:

- Technical metric: "Customer data completeness improved by 15%."

- Business outcome: "Our marketing team reduced wasted ad spend by 15% thanks to improved customer data quality."

The business outcome statement connects the technical improvement to a financial outcome that executives care about, making it far more compelling when seeking continued support for governance initiatives.

⭐ Case studies and resources worth exploring ⭐

- Deloitte’s white paper “Transformation towards a Data-driven Business” underscores the importance of a structured approach that links data initiatives to business value

- Understanding "Data Debt" and how governance can help with damage control

- 10-minute video: How Data Debt Turns Into a Spaghetti Data Architecture

- Article: Four main categories of Data Debt

Offensive governance capabilities deliver quick wins that build momentum for your overall program. They demonstrate that governance is an enabler, not just a control function.

By focusing on these value-creating elements first, you create a virtuous cycle: value drives adoption, adoption improves implementation, and better implementation delivers more value.

Democratization: Making Data Work for Everyone

Locked-away data creates no value.

True governance doesn't restrict access - it enables appropriate access while maintaining control. Democratization makes data available to the right people, at the right time, with the right context.

Step 1: Build an intuitive data discovery experience

You can't use what you can't find. Discovery capabilities should make it easy for users to find the data they need.

Bottom line, upfront. When implementing discovery:

- Focus on user experience - catalogs that feel like burdens won't be adopted

- Prioritize business metadata over technical metadata

- Build curation into everyday workflows, not separate processes

- Use automation to reduce documentation burden

Data discovery implementation steps that work, in descending order of impact:

- Implement Google-like search: An intuitive semantic search interface that understands business context, not just exact matches, is the way to go. A marketing team could search for "customer retention" and find relevant datasets even if they're technically named something like "churn_analysis_metrics."

- Add context to metadata: Technical metadata alone isn't enough. Attach business context that helps users understand data's meaning, quality, lineage, and appropriate usage. When an analyst finds a customer dataset, they should immediately see who owns it, how fresh it is, and how it connects to other assets.

- Surface automated quality assessments with visual cues: A financial dashboard could display confidence scores for the datasets powering its insights, helping users trust what they're seeing. A marketing team should be able to instantly see if a prospect dataset is complete enough for campaign planning without manual validation.

- Show which datasets are most frequently used by similar roles: A product manager exploring customer feedback data should see which datasets their peers rely on most (relevance signals).
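The search behavior described in the first bullet can be approximated with glossary-based query expansion. The sketch below is a simple keyword stand-in for true semantic search (which typically relies on embeddings); the glossary entries, catalog contents, and `search` function are hypothetical:

```python
# Hypothetical business glossary: business phrase -> technical terms it should match
GLOSSARY = {
    "customer retention": ["churn", "retention"],
    "revenue": ["sales", "gmv", "revenue"],
}

# Hypothetical catalog entries: technical name -> short business description
CATALOG = {
    "churn_analysis_metrics": "Monthly churn rate by customer segment",
    "web_sessions_raw": "Raw clickstream sessions",
    "sales_daily": "Daily sales totals by store",
}

def search(query: str) -> list[str]:
    """Expand the query through the glossary, then match asset names and descriptions."""
    normalized = query.lower().strip()
    terms = GLOSSARY.get(normalized, normalized.split())  # fall back to plain keywords
    hits = []
    for name, description in CATALOG.items():
        haystack = f"{name} {description}".lower()
        if any(term in haystack for term in terms):
            hits.append(name)
    return sorted(hits)

print(search("customer retention"))  # -> ['churn_analysis_metrics']
print(search("Revenue"))             # -> ['sales_daily']
```

Even this crude version shows why the glossary matters: the business phrase never appears in the technical name, yet the right dataset is found.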

The difference between struggling teams and high-performing ones often comes down to discovery. Effective discovery reduces "time to insight" by eliminating the hunt for data. When a healthcare organization implemented semantic search, analysts who previously spent 40% of their time hunting for data could redirect that effort toward actual analysis.

Discovery capabilities should answer common questions like "Which customer dataset should I use for this analysis?" or "Who knows about this data field?"

QUICK WIN: Map data owners for your top 20% most-used data assets. Simply knowing who to contact about data issues dramatically improves governance efficiency without requiring complex systems.
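One way to start the owner-mapping exercise is to rank assets by usage (for example, query counts pulled from your warehouse's access logs) and list the owner of each asset in the top 20%. The usage numbers, asset names, and helper functions below are hypothetical:

```python
import math

# Hypothetical query counts per asset over the last 90 days
usage = {
    "orders": 950, "customers": 870, "payments": 660, "campaigns": 410,
    "inventory": 300, "tickets": 210, "web_sessions": 120, "shipments": 90,
    "returns": 45, "suppliers": 30,
}
# Hypothetical owner registry; most assets have no owner recorded yet
owners = {"orders": "e-commerce team"}

def top_assets(usage: dict[str, int], percent: int = 20) -> list[str]:
    """Return the most-used assets, covering the top `percent` of assets by count."""
    k = max(1, math.ceil(len(usage) * percent / 100))
    return sorted(usage, key=usage.get, reverse=True)[:k]

def owner_map(usage: dict[str, int], owners: dict[str, str]) -> dict[str, str]:
    """Map each top asset to its owner, flagging gaps as UNASSIGNED."""
    return {asset: owners.get(asset, "UNASSIGNED") for asset in top_assets(usage)}

print(owner_map(usage, owners))
# -> {'orders': 'e-commerce team', 'customers': 'UNASSIGNED'}
```

Every UNASSIGNED entry is a concrete follow-up: find the person who can answer questions about that asset.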

Step 2: Create a functional and compliant data marketplace

A data marketplace takes discovery further by structuring the exchange of data assets.

Unlike traditional catalogs, which simply inventory assets, a data marketplace actively facilitates data exchange.

Data marketplace implementation steps that work, in descending order of impact:

- Combine datasets with documentation, quality metrics, and usage examples: Packaged data products are powerful. A customer dataset might include field definitions, update frequency, and sample analyses.

- Implement self-service access: Users should be able to request access through streamlined processes with automated approvals for non-sensitive data. A business analyst shouldn't wait days for access to public customer segmentation data. Similarly, a product manager should be able to request access to usage data and receive automated approval within minutes rather than days.

- Build compliance checks into the marketplace itself: Embedded compliance ensures sensitive data is appropriately protected. Automated PII detection can flag datasets requiring special handling without burdening data owners.

- Value signaling (user ratings/reviews): Track and display usage metrics, user ratings, and success stories to highlight high-value assets. Teams should be able to see that a particular customer dataset has powered five successful marketing campaigns.
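The self-service and embedded-compliance steps can work together: a request is auto-approved only when the dataset is classified as public and a lightweight PII scan of sample values finds nothing. This sketch assumes two deliberately simple regex patterns (email and US SSN); a real deployment would rely on a dedicated PII classifier, and the function names are illustrative:

```python
import re

# Deliberately simple, illustrative PII patterns; not a complete detector
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def detect_pii(sample_values: list[str]) -> set[str]:
    """Return the labels of PII patterns found in a sample of column values."""
    return {label for value in sample_values
            for label, pattern in PII_PATTERNS.items() if pattern.search(value)}

def route_request(dataset: str, classification: str, sample_values: list[str]) -> str:
    """Auto-approve public datasets with no detected PII; send everything else to the owner."""
    pii = detect_pii(sample_values)
    if classification == "public" and not pii:
        return f"auto-approved: {dataset}"
    reason = ", ".join(sorted(pii)) if pii else f"classified {classification}"
    return f"owner review: {dataset} ({reason})"

print(route_request("customer_segments", "public", ["high-value", "churn-risk"]))
# -> auto-approved: customer_segments
print(route_request("webinar_leads", "public", ["jane@example.com"]))
# -> owner review: webinar_leads (email)
```

Note that the scan overrides the label: a dataset tagged "public" that actually contains email addresses still goes to its owner.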

Unlike external data marketplaces that focus on monetization, internal marketplaces democratize data while maintaining appropriate controls. They're about creating a trusted environment for data sharing, not selling data.

Organizations with functional marketplaces can dramatically accelerate time-to-insight. A retail company might enable merchandising teams to discover, understand, and access inventory patterns without IT involvement, allowing them to respond to trends within hours instead of weeks.

Step 3: Connect people with context

Data without context creates confusion, not insights.

When users find data, they need enough context to understand what it means, where it came from, and how to use it properly:

- Business glossaries: Establish consistent terminology across domains. "Active customer" should mean the same thing whether in marketing, sales, or product teams.

- Knowledge capture: Enable comments, discussions, and annotations on data assets to preserve organizational knowledge. When a finance team discovers an anomaly in quarterly data, their explanation should be permanently attached to that dataset.

- Data lineage visualization: Show how data flows through systems from source to consumption. A dashboard user should be able to trace metrics back to their origin, building confidence in the insights.

- Active metadata: Capture usage patterns, popular queries, and frequent joins to reveal how data is actually used. Teams should see which customer attributes are most commonly analyzed together.
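Active metadata such as "which attributes are analyzed together" can be derived from query logs by counting column co-occurrence. This sketch assumes you have already extracted the set of columns referenced by each query; the log below is hypothetical:

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(query_columns: list[set[str]], top_n: int = 3) -> list[tuple[tuple[str, str], int]]:
    """Count how often each pair of columns appears in the same query."""
    counts: Counter = Counter()
    for columns in query_columns:
        # Sort so ("a", "b") and ("b", "a") are counted as the same pair
        for pair in combinations(sorted(columns), 2):
            counts[pair] += 1
    return counts.most_common(top_n)

# Hypothetical columns referenced by four queries against a customer table
query_log = [
    {"customer_id", "segment", "lifetime_value"},
    {"customer_id", "segment"},
    {"segment", "lifetime_value"},
    {"customer_id", "region"},
]

for (left, right), count in frequent_pairs(query_log, top_n=2):
    print(f"{left} + {right}: {count} queries")
```

Surfacing these pairs next to a dataset tells newcomers how experienced colleagues actually combine its attributes.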

Context turns raw data into usable information. A financial services firm could reduce reporting errors by 30% simply by connecting analysts with institutional knowledge about data's meaning and appropriate use.

Every piece of context added to a data asset multiplies its value to the organization.

QUICK WIN: Create a simple business glossary for your highest-priority data domain. Define 10-15 key terms that often cause confusion and publish them where everyone can access them. This immediately reduces miscommunication and improves data understanding at minimal cost.

Step 4: Break down silos while maintaining governance

Democratization must balance enablement with control.

Data locked in departmental silos prevents cross-functional insights, but uncontrolled access creates security and quality risks.

Balance enablement with appropriate control through:

- Domain-based access: Organize data around business domains rather than technical systems. Marketing teams should access customer data through familiar business concepts, not by navigating database structures.

- Progressive disclosure: Reveal appropriate levels of detail based on user role and expertise. Business users see curated views with business terms, while data engineers can access technical metadata when needed.

- Privacy-compliant techniques: This builds on the “embedded compliance” marketplace capability covered briefly above. It’s all about applying privacy-preserving transformations to sensitive data rather than blocking access entirely. Teams might access aggregated customer behavior patterns without seeing individual customer details.

- Access monitoring and analytics: Track how data is used to identify both gaps and risks. If customer service teams consistently request marketing data, it signals an opportunity to create cross-functional views.

- Data contracts and SLAs: Formalize expectations between data producers and consumers regarding quality, freshness, and availability. When product teams provide usage data to marketing, both sides should understand exactly what will be delivered, when, and at what quality level.
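One simple privacy-preserving transformation is to expose only aggregates and suppress any group too small to hide individuals (small-cell suppression). The sketch below assumes a minimum group size of 5 and hypothetical field names; it is a basic safeguard, not differential privacy:

```python
from collections import defaultdict

MIN_GROUP_SIZE = 5  # assumed threshold; agree on the real value with your privacy team

def aggregate_by_segment(rows: list[dict], min_size: int = MIN_GROUP_SIZE) -> dict:
    """Return per-segment customer counts and average spend, suppressing small groups."""
    totals: dict = defaultdict(lambda: [0, 0.0])  # segment -> [customer count, total spend]
    for row in rows:
        bucket = totals[row["segment"]]
        bucket[0] += 1
        bucket[1] += row["spend"]
    result = {}
    for segment, (count, spend) in totals.items():
        if count >= min_size:
            result[segment] = {"customers": count, "avg_spend": round(spend / count, 2)}
        else:
            result[segment] = "suppressed (group too small)"
    return result

# Hypothetical customer-level rows; identifiers never leave this function
rows = [{"customer_id": f"c{i}", "segment": "loyal", "spend": 100.0} for i in range(6)]
rows.append({"customer_id": "c99", "segment": "vip", "spend": 5000.0})

print(aggregate_by_segment(rows))
# -> {'loyal': {'customers': 6, 'avg_spend': 100.0}, 'vip': 'suppressed (group too small)'}
```

Without suppression, the "vip" average would simply be one identifiable customer's spend.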

Data democratization is about striking the right balance between enablement and control. When done right, users don't experience governance as restriction but as the framework that makes confident data use possible.

Operational Excellence: Optimizing Regular Governance Operations

Governance that exists only in documents delivers no value.

Excellence in data governance means embedding it into daily operations so thoroughly that it becomes invisible - just how work gets done. This requires focused programs, clear metrics, and continuous improvement.

Step 1: Continuous improvement of data quality and data observability

Quality doesn't improve by accident; it requires structured programs:

- SLA management: Establish clear, measurable quality expectations for critical data domains. A customer data SLA might specify 99.5% completeness for required fields and detection of anomalies within 4 hours.

- Quality-driven process automation: Build quality checks into data pipelines. Marketing analytics pipelines could automatically quarantine campaign data with abnormal conversion patterns for review before it affects downstream reports.

- Quality improvement rituals: Create regular review cadences for quality metrics. Monthly quality reviews might reveal that customer address validation rates have slipped, triggering targeted improvement initiatives.

- Data pipeline optimization: Streamline data flows to improve quality and performance. A financial services firm might implement incremental validation checks throughout transformation processes rather than only at the endpoints.

- Modern data stack capabilities: Leverage observability features in modern tools. Cloud data platforms can provide real-time quality monitoring across the entire data lifecycle with minimal custom development.
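The SLA and process-automation steps can be combined into a pipeline quality gate. This sketch assumes the 99.5% completeness target from the SLA example, a hypothetical set of required fields, and a simple 3-sigma rule against recent history as the anomaly check for a metric such as conversion rate. It does not cover detection latency, which your scheduler or observability tool would track:

```python
import statistics

REQUIRED_FIELDS = ("customer_id", "email", "country")  # hypothetical required fields
COMPLETENESS_SLA = 0.995  # 99.5% completeness target from the SLA example

def completeness(rows: list[dict]) -> float:
    """Share of required field values that are present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows for f in REQUIRED_FIELDS if row.get(f) not in (None, ""))
    return filled / (len(rows) * len(REQUIRED_FIELDS))

def is_anomalous(value: float, history: list[float], z_threshold: float = 3.0) -> bool:
    """Flag values more than `z_threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # requires at least two historical points
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > z_threshold

def quality_gate(rows: list[dict], metric: float, history: list[float]) -> tuple[str, list[str]]:
    """Decide whether a batch is published or quarantined for review."""
    reasons = []
    score = completeness(rows)
    if score < COMPLETENESS_SLA:
        reasons.append(f"completeness {score:.1%} below SLA {COMPLETENESS_SLA:.1%}")
    if is_anomalous(metric, history):
        reasons.append(f"metric {metric} is a 3-sigma outlier against recent history")
    return ("quarantine" if reasons else "publish", reasons)

history = [0.030, 0.032, 0.029, 0.031, 0.030]  # hypothetical daily conversion rates
batch = [{"customer_id": f"c{i}", "email": f"u{i}@example.com", "country": "DE"} for i in range(10)]
print(quality_gate(batch, 0.031, history))     # -> ('publish', [])
print(quality_gate(batch, 0.090, history)[0])  # -> quarantine
```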

The shift from reactive to proactive quality management can transform operational efficiency. Organizations that embed proactive quality management efforts into their operations could reduce data incidents by up to 80%.

A healthcare provider might move from reactive data firefighting to proactive quality management by implementing continuous monitoring across their patient data ecosystem.

When quality becomes embedded rather than enforced, teams spend more time on insights and less on fixes.

Step 2: Improving data democratization through initiatives that drive adoption

Democratization fails without intentional adoption efforts, which require both tools and culture:

- Usage monitoring: Track how data is accessed and applied. A manufacturing company might discover that quality data is rarely used by operations teams, revealing an opportunity to create more relevant views.

- Continuous improvement and training (data literacy and democratization programs): Develop targeted training based on role needs. Executives might learn data interpretation fundamentals while analysts dive deeper into analytical techniques.

- Community outreach: Build networks of data champions across departments. Create a forum with dedicated time for guidance and questions where team members can discuss data quality concerns with governance experts. Share recurring governance updates (at whatever cadence suits your organization) that highlight achievements and lessons from successful data-driven projects, creating peer learning opportunities.

- Platform enhancements: Continuously improve discovery and access tools. User feedback mechanisms could reveal that business users struggle to find relevant data assets, prompting improvements to search functionality.

- Adoption incentives: Reward behaviors that support governance goals. Recognition programs might highlight teams that contribute high-quality documentation or improve data quality.

The transition from "data locked away" to "data democratized with guardrails" can transform decision velocity. A retail organization might reduce time-to-insight from weeks to days by combining appropriate access with just-in-time support. By focusing on specific user needs, a financial services company could increase data usage by making governed data easier to access than ungoverned alternatives.

The most successful organizations make democratization practical, not theoretical.

Step 3: Setting up critical cross-functional activities to maintain operational excellence

Consistency requires cross-functional alignment:

- Cross-system data consistency: Establish mechanisms to ensure data means the same thing everywhere. A retail organization could implement master data management to ensure product classifications remain consistent across inventory, e-commerce, and analytics systems.

- Stakeholder collaboration forums: Create structured opportunities for data stakeholders to align. Monthly data stewardship councils might bring together representatives from marketing, sales and finance to resolve conflicting definitions of "customer" or "active account."

- Change management: Implement formal processes for data changes that affect multiple teams. When modifying customer segmentation rules, a change management process could ensure all downstream reports and models are updated consistently.

As always, too much process backfires (“death by process”). Aim for lightweight processes that establish clear governance roles and communication channels, so key stakeholders can handle changes productively and maintain cross-system consistency.
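Change management gets much lighter when lineage is available. Before modifying an asset such as customer segmentation rules, a breadth-first traversal of the lineage graph lists every downstream report and model whose owners should be notified. The lineage map below is hypothetical; in practice it would come from your catalog or lineage tool:

```python
from collections import deque

# Hypothetical lineage edges: asset -> assets that read from it directly
LINEAGE = {
    "customer_segmentation_rules": ["customer_segments"],
    "customer_segments": ["campaign_targeting_model", "segment_revenue_report"],
    "campaign_targeting_model": ["weekly_campaign_dashboard"],
}

def downstream_impact(asset: str, lineage: dict[str, list[str]] = LINEAGE) -> list[str]:
    """Breadth-first traversal returning every asset affected by a change to `asset`."""
    affected: set[str] = set()
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in affected:  # guards against cycles and repeated visits
                affected.add(child)
                queue.append(child)
    return sorted(affected)

print(downstream_impact("customer_segmentation_rules"))
# -> ['campaign_targeting_model', 'customer_segments', 'segment_revenue_report', 'weekly_campaign_dashboard']
```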

Don't forget these critical success factors for sustainable operational excellence, listed in descending order of importance:

- Executive sponsorship: Secure active leadership support. When executives regularly review data quality metrics alongside financial results, the organization gets the message.

- Well-defined ownership: Establish clear accountability for each governance domain. Everyone should know which team owns customer data quality and who to contact when issues arise.

- Risk and reward based prioritization: Apply governance proportional to data sensitivity and value. Customer financial data warrants stronger controls than website analytics.

- Integration with existing workflows: Embed governance into tools people already use. Data scientists shouldn't leave their development environment to access governance information.

- Process optimization: Streamline data and business processes based on governance insights. Order processing teams might discover through data quality monitoring that 30% of delays stem from inconsistent address formats, prompting upstream validation improvements.

Organizations that nail these factors can transform governance from overhead to advantage. A financial services firm might build data governance directly into their agile development process, allowing teams to move quickly while maintaining compliance.

Note: We'll explore measuring governance performance in depth in the fifth installment of this series.

Value Protection: Managing Risks and Enforcing Regulatory Compliance

We're now shifting gears to implementing defensive governance. While offensive governance focuses on value creation, data democratization, and operational excellence, defensive governance ensures value protection through regulatory compliance and effective risk management.

Note: For a comprehensive overview of defensive governance, refer to the second installment in this series: Balancing a Data Governance Strategy: The Defensive-Offensive Framework. In this section, we'll focus on how to implement it.

Mapping your regulatory landscape

Regulatory requirements continue to expand globally, creating a complex compliance terrain. Make sure you're set up to do ALL of the following:

- Document which regulations affect your data across jurisdictions: A global financial services company might maintain a living document mapping GDPR, CCPA, HIPAA, and industry-specific requirements to affected datasets.

- Systematically evaluate how regulations affect your data operations: New privacy regulations could trigger controls that affect how marketing teams use customer data for campaign targeting.

- Monitor regulation changes: Stay ahead of evolving requirements. Governance teams could establish a quarterly regulatory review process to assess how proposed legislation might impact data strategy.

- Actively convert legal language into practical policies: Rather than forcing teams to interpret complex regulations (as they arise), governance could provide clear guidelines on handling PII across different systems.

Organizations that proactively map their regulatory landscape can respond to changes with precision rather than panic. By understanding exactly which datasets and processes are affected by specific regulations, compliance becomes targeted rather than blanket restriction.

Risk management strategies that don't impede innovation

Effective risk management enables rather than blocks.

- Develop a consistent methodology for evaluating data risks: A standardized approach or a risk assessment framework could help teams quickly determine appropriate controls based on data sensitivity and usage context.

- Apply controls proportional to risk levels: Risk tiering is the baseline. Customer financial data might require strict access controls and encryption, while aggregated website analytics could have lighter protections.

- Create clear paths for appropriate risk-taking: When business value justifies controlled risk, formal risk acceptance processes allow innovation to proceed with eyes open.

- Risk mitigation through design: Build protection into data products from the start. Privacy-preserving techniques like differential privacy or data minimization could allow analytics teams to derive insights without exposing sensitive details.

Progressive organizations view risk management as an enabler of innovation. By establishing clear guardrails, a healthcare organization could accelerate research initiatives while maintaining patient data protection through appropriate anonymization techniques.

Implementing security controls within governance

Security implementation should align with your governance maturity.

- Define who can access what data based on your governance risk model: A governance framework might establish standard access patterns that can be implemented through role-based or attribute-based controls depending on organizational maturity.

- Connect data policies directly to technical controls: Data classification standards could automatically trigger appropriate protection measures like masking or encryption based on sensitivity levels.

- Focus security monitoring on governance priorities: Teams could implement targeted monitoring for high-value data domains first, progressively expanding coverage as governance matures.

- Build verification mechanisms that scale with your data footprint: Automated monitoring could verify that security controls remain aligned with governance policies even as your data landscape grows.
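Connecting classification standards to technical controls can be expressed as policy-as-code. This sketch assumes four hypothetical sensitivity levels, a clearance level per role, and a simple rule: show columns at or below the role's clearance, mask confidential columns above it, and omit anything else above it. Unclassified columns default to the most restrictive level so the policy fails closed:

```python
# Assumed sensitivity levels, ordered from least to most sensitive
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}

# Hypothetical outputs of a data classification standard and a role model
COLUMN_CLASSIFICATION = {
    "customer_id": "internal",
    "segment": "public",
    "email": "confidential",
    "card_number": "restricted",
}
ROLE_CLEARANCE = {"marketing_analyst": "internal", "fraud_investigator": "restricted"}

def mask(value: str) -> str:
    """Hide all but the last four characters (short values are fully hidden)."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]

def apply_policy(row: dict, role: str) -> dict:
    """Return the view of `row` that `role` is allowed to see."""
    clearance = LEVELS[ROLE_CLEARANCE[role]]
    visible = {}
    for column, value in row.items():
        # Unclassified columns are treated as restricted, so the policy fails closed
        level = LEVELS[COLUMN_CLASSIFICATION.get(column, "restricted")]
        if level <= clearance:
            visible[column] = value
        elif level == LEVELS["confidential"]:
            visible[column] = mask(str(value))
        # any other column above the role's clearance is omitted entirely
    return visible

row = {"customer_id": "C123", "segment": "loyal", "email": "jane@example.com", "card_number": "4111111111111111"}
print(apply_policy(row, "marketing_analyst"))
```

For the marketing analyst, the email comes back masked and the card number is dropped, while the fraud investigator sees the full row. Because the rule reads the classification rather than a per-dataset list, reclassifying a column changes its protection everywhere at once.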

The right implementation approach depends on your governance maturity. Early-stage programs might start with basic role-based security while more mature organizations could implement context-aware controls. The key is ensuring security implementation directly supports governance objectives rather than operating in isolation.

Compliance tactics that scale

Compliance must grow with your data ecosystem. Here are four ways you can scale your compliance efforts:

- Ensure compliance by design: Build requirements into processes and systems from the start. Data ingestion pipelines could automatically classify sensitive data and apply appropriate retention policies.

- Capture compliance proof as part of regular operations: Access logs, change management records, and data lineage could serve as continuous evidence of controls rather than point-in-time audits.

- Build self-service compliance tools: Enable teams to measure their own compliance posture. Pre-built templates and checklists could help product teams evaluate whether new features meet regulatory requirements.

- Coordinate compliance education: Build awareness through targeted training and resources. Role-specific guidance could help data scientists understand exactly how privacy regulations affect model development without overwhelming them with legal details.
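Compliance by design at ingestion can be as simple as classifying each record and stamping a deletion date from a retention policy, so purging becomes a routine filter and the stamped fields double as evidence of the control. The classification rules and retention periods below are hypothetical placeholders for your own policies:

```python
from datetime import date, timedelta

# Hypothetical retention periods per classification; take the real values from your policies
RETENTION_DAYS = {"pii": 365, "operational": 730, "aggregate": 3650}
PII_KEYS = ("email", "phone", "ssn")  # illustrative, not a complete PII list

def classify(record: dict) -> str:
    """Assign a coarse classification from the fields present in the record."""
    if any(key in record for key in PII_KEYS):
        return "pii"
    if "total" in record:
        return "aggregate"
    return "operational"

def ingest(record: dict, ingested_on: date) -> dict:
    """Attach classification and deletion date at load time."""
    label = classify(record)
    return {
        **record,
        "_classification": label,
        "_delete_after": ingested_on + timedelta(days=RETENTION_DAYS[label]),
    }

def purge_expired(records: list[dict], today: date) -> list[dict]:
    """Keep only records still inside their retention window."""
    return [r for r in records if r["_delete_after"] >= today]

loaded = [ingest({"email": "a@example.com"}, date(2024, 1, 1)),
          ingest({"order_id": "A1"}, date(2024, 1, 1))]
print([r["_delete_after"] for r in loaded])
# -> [datetime.date(2024, 12, 31), datetime.date(2025, 12, 31)]
print(len(purge_expired(loaded, today=date(2025, 6, 1))))  # -> 1
```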

Organizations that scale compliance effectively focus on embedding it into everyday work. By integrating compliance checks into CI/CD pipelines, a software company could ensure that data handling standards are verified with each release rather than through disruptive audit cycles.

The most successful organizations view defensive governance not as a constraint but as a foundation for trust. When data is properly protected, teams can innovate with confidence rather than caution.

Building the Tech Foundation: Choosing the Right Toolkit for Governance

Technology enables scale in governance implementation. The right tools support both offensive and defensive objectives when properly integrated.

Management platform considerations

A central governance platform serves as the foundation:

- Management capabilities: Look for encryption, configuration, controls, and reporting features. The platform should provide a single control plane that connects policies to implementation.

- Platform vs. point solutions: Consider whether an integrated platform or specialized tools better fits your maturity level. Early-stage programs might start with focused tools while enterprise-scale initiatives often require integrated platforms.

Key platforms include Collibra (enterprise-wide governance), Alation (catalog-centric governance), and Atlan (modern active metadata platform). The right choice depends on your organizational culture and existing tech stack as much as features.

Tool selection for each governance domain

Different governance functions require specialized capabilities:

- Data quality and observability tools: Platforms like Monte Carlo and Telm.ai support offensive governance through data quality monitoring and data observability. They detect quality issues before they impact business decisions.

- Data catalog/lineage: Solutions like Atlan, Alation, Collibra and Zeenea enable both offensive (data discovery, collaboration) and defensive (documentation, lineage) objectives. Modern catalogs serve as the connective tissue between governance domains.

- Master and reference data management: Solutions from Reltio, Informatica, and Semarchy maintain consistent data definitions across systems, primarily supporting defensive consistency.

- Compliance monitoring: Specialized tools from OneTrust, BigID, and Securiti automate defensive regulatory controls but can also enable offensive use by clarifying what's permissible.

- Data security tools: Solutions like Zscaler, Okta, Immuta, and Privacera implement fine-grained access control, balancing defensive protection with offensive enablement.

Popular data platforms like Snowflake and Databricks now include native governance capabilities, allowing governance to be embedded directly in data infrastructure.

Integration strategies for your existing tech landscape

Successful implementation requires integration:

- API-first integration: Prioritize tools with robust APIs that connect to your existing stack. Modern governance can't operate in isolation.

- Workflow integration: Look for tools that embed into users' daily workflows. Governance that requires context switching sees lower adoption.

- Metadata synchronization: Ensure governance tools can share metadata to prevent silos. The ideal state is unified metadata flowing between systems.

- Automation capabilities: Evaluate each tool's ability to automate routine governance tasks. Manual governance doesn't scale.

Build vs. buy decisions in governance tooling

Not every governance capability requires commercial tools:

- Core vs. context: Build custom solutions only for truly differentiating capabilities. Use commercial tools for standard governance functions.

- Maturity considerations: Your governance maturity should influence build/buy decisions. Early-stage programs often benefit from commercial tools that embed best practices.

- Integration costs: Factor in the true cost of integrating any solution. Sometimes the "best" tool is the one that connects most seamlessly to your ecosystem.

- AI governance considerations: As AI capabilities grow, specialized tools for ML governance are emerging. These connect model development to broader data governance.

Most successful implementations use a mix of commercial platforms, open-source tools, and targeted custom capabilities integrated through a cohesive metadata layer.

The technology decisions you make should directly support your governance strategy - offensive tools that accelerate appropriate data use and defensive tools that protect against inappropriate use, working in harmony to create a trusted data environment.

Implementation: Taking First Steps into Action

Frameworks become valuable only through implementation. This section focuses on the immediate tactical actions to begin your governance journey over the next 3-6 months. We'll explore how to select your starting point, implement essential capabilities in your priority domains, and expand from initial successes to pilot projects. Think of this as your guide to getting governance off the ground and demonstrating early value.

Effective data governance doesn't require implementing everything at once. “Effective” is contextual and always a moving target!

Minimum Viable Governance

If you’re building a data foundation, you CANNOT go wrong with Minimum Viable Governance. Period.

Start with these essential elements:

- Clear ownership: Define who's responsible for key data domains

- Basic quality standards: Establish minimal acceptable quality for critical data

- Simple discovery: Create a central inventory of important data assets

- Essential policies: Document how sensitive data should be handled

- Access management: Ensure appropriate controls for sensitive information

This foundation delivers immediate value while setting the stage for more advanced capabilities as your organization matures.

However, if your maturity assessment indicates that you need to evolve your data governance efforts, remember that the modern data governance framework becomes valuable only through thoughtful implementation. Here's how to turn concepts into action.

Domain Prioritization: Starting with high-value, high-risk domains

Begin where you can demonstrate tangible value:

- Domain prioritization: Assess domains based on business value and risk exposure. Customer data typically ranks high on both dimensions, making it a common starting point.

- Quick wins: Identify opportunities for rapid improvement. Implementing basic data quality rules for a critical marketing dataset could immediately reduce campaign errors.

- Executive alignment: Secure leadership support for your initial focus areas. When executives understand why you're starting with specific domains, they become allies in implementation.
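A lightweight way to apply the value-and-risk criteria is to score each domain in a stakeholder workshop and rank by the product of the two scores. The scores below are hypothetical, and a weighted sum is an equally reasonable choice if one dimension matters more to you:

```python
# Hypothetical 1-5 scores agreed in a stakeholder workshop
domain_scores = {
    "customer": {"value": 5, "risk": 5},
    "product": {"value": 4, "risk": 2},
    "finance": {"value": 3, "risk": 5},
    "facilities": {"value": 1, "risk": 1},
}

def prioritize(scores: dict[str, dict[str, int]]) -> list[tuple[str, int]]:
    """Rank domains by value x risk, highest first."""
    ranked = [(name, s["value"] * s["risk"]) for name, s in scores.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)

print(prioritize(domain_scores))
# -> [('customer', 25), ('finance', 15), ('product', 8), ('facilities', 1)]
```

Writing the scores down also helps with executive alignment: leaders can challenge a number instead of the whole decision.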

A healthcare organization might begin with patient data (high value for improving care and high risk for compliance) before expanding to operational domains.

After selecting your priority domains using the criteria above, the following section provides specific implementation guidance based on your organization's context and needs.

Organization-specific Implementation Paths: Where to Begin

Your governance journey should start where you can deliver tangible value quickly. Here are specific starting points based on common organizational scenarios:

For Organizations Facing Regulatory Pressure:

- Map regulatory requirements to specific data domains

- Document current compliance controls

- Identify and address critical gaps

- Build governance around these essential controls

For Organizations Focused on Analytics:

- Implement basic quality for analytical data sources

- Create discovery capabilities for analytical assets

- Document data definitions and calculations

- Establish data product ownership

For Organizations Modernizing Legacy Systems:

- Map critical data flows across systems

- Document data translation patterns

- Establish quality checks at integration points

- Define data ownership across system boundaries

For Fast-Growing Organizations:

- Establish a lightweight data ownership model

- Create basic documentation standards

- Implement essential access controls

- Build quality monitoring into new data pipelines

Remember that your starting point isn't your destination. Begin with focused efforts that address immediate needs, demonstrate value, and build momentum for broader governance initiatives.

Your First 90 Days in Action (30/60/90 Plan)

Define concrete outcomes for your initial implementation with 30/60/90-day plans.

Avoid the common trap of overly ambitious timelines. A retail organization planning a 3-month enterprise-wide catalog implementation might set itself up for failure, whereas a progressive 18-month rollout would give it a better chance of success.

Here’s what a 30/60/90-day plan for your initial data governance implementation could look like:

Days 1-30:

- Complete a governance maturity assessment

- Identify 1-2 high-priority domains

- Establish initial governance roles and responsibilities

- Define success metrics for your initial focus areas

Days 31-60:

- Implement basic quality monitoring for priority domains

- Create or enhance data discovery capabilities

- Develop essential policies and standards

- Build awareness through targeted communications

Days 61-90:

- Measure and communicate initial improvements

- Expand to 1-2 additional domains

- Enhance governance capabilities based on early feedback

- Develop a longer-term governance roadmap

The time to start is now: the path to governance success begins with a single step. By taking thoughtful, targeted action today, you build the foundation for data excellence that will serve your organization for years to come.

Building governance into data products

Embed governance as a product feature, not an afterthought:

- Include governance capabilities in product specifications: Data catalogs should support both discovery and lineage tracking by design.

- Design governance to enhance rather than hinder user workflows: Prioritize user experience. Self-service analytics platforms could incorporate quality indicators that help users select trustworthy data.

- Incorporate governance checks into development pipelines: Automated tests could verify that new datasets include required metadata.
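The metadata check mentioned in the last point can be a small function that runs in CI before a dataset is published. This is a minimal sketch. The required fields, the classification values, and the dataset structure are hypothetical placeholders for your own metadata standards.

```python
# Hypothetical metadata policy; adjust the fields and classifications to your own standards.
REQUIRED_FIELDS = ("owner", "description", "domain", "classification")
ALLOWED_CLASSIFICATIONS = {"public", "internal", "confidential", "restricted"}


def metadata_problems(dataset: dict) -> list[str]:
    """List the metadata issues that should block a dataset from being published."""
    meta = dataset.get("metadata", {})
    problems = [
        f"missing or empty field: {field}" for field in REQUIRED_FIELDS if not meta.get(field)
    ]
    classification = meta.get("classification")
    if classification and classification not in ALLOWED_CLASSIFICATIONS:
        problems.append(f"unknown classification: {classification}")
    return problems


def check_datasets(datasets: list[dict]) -> dict[str, list[str]]:
    """Map each non-compliant dataset name to its problems."""
    report = {}
    for dataset in datasets:
        problems = metadata_problems(dataset)
        if problems:
            report[dataset["name"]] = problems
    return report


if __name__ == "__main__":
    datasets = [
        {"name": "orders_daily", "metadata": {"owner": "sales-data", "description": "Daily orders",
                                              "domain": "sales", "classification": "internal"}},
        {"name": "leads_raw", "metadata": {"owner": "", "domain": "marketing", "classification": "secret"}},
    ]
    for name, problems in check_datasets(datasets).items():
        print(name, problems)
```

In a CI job, you could exit with a non-zero status whenever the report is not empty, so incomplete metadata blocks the merge instead of surfacing later as a support ticket.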

The most successful implementations treat governance as a core product feature. A financial services firm could see higher adoption of governed data when quality metrics appear directly within analytics tools.

Scaling governance beyond pilot projects

Expansion requires systematic approaches:

- Reusable patterns: Create templates and playbooks from initial successes. Documentation from your customer data domain governance can streamline implementation for product data.

- Federated implementation: Enable domain teams to implement within a central framework. Subject matter experts in marketing might adapt governance standards to their specific needs while maintaining enterprise consistency.

- Progressive governance maturity: Implement capabilities matched to each domain's readiness. New domains might start with basic documentation while mature domains implement advanced quality monitoring.

- Governance communities: Build networks of practitioners across domains. Regular forums allow teams to share implementation approaches and lessons learned.

Scale happens through consistency, not control. A retail organization could accelerate adoption by creating a governance toolkit that domain teams adapt to their specific contexts.

These scaling principles provide the foundation for expanding your initial governance successes. In the next section, we’ll build on these concepts to develop a multi-year strategic roadmap that helps your governance program mature and scale effectively.

The Governance Roadmap: Planning for Long-Term Success

Building effective data governance is a journey measured in years, not months. Once you've established your initial implementation, this section will guide your strategic planning for governance maturity over the next 1-3 years.

We'll explore how to secure resources, anticipate organizational changes, avoid common scaling pitfalls, and create a phased approach that grows your governance capabilities in alignment with business priorities.

Start Small, Think Big, Scale Smart

Remember our governance approach, SSTBSS (Start Small, Think Big, Scale Smart), with this mnemonic:

Sassy Squirrels Throw Banana Skins Sideways 😉

The most successful governance programs follow this pattern:

- Start small: Focus on one high-impact domain where improved governance will deliver visible value.

- Think big: Design your initial implementation with scalability in mind, using patterns that can extend to other domains.

- Scale smart: Expand methodically based on business priorities and demonstrated success, not arbitrary timelines.

Remember that governance maturity builds over time. Each successful domain implementation creates momentum and provides lessons that accelerate subsequent efforts.

Resource planning and stakeholder alignment

Secure the people, budget, and executive support you need:

- Team structure: Determine the right mix of central and federated governance roles. A hub-and-spoke model with central coordination and domain ownership often balances consistency and domain expertise.

- Skill development: Identify capability gaps and training needs. New data stewards may need structured onboarding and mentoring from experienced practitioners.

- Budget allocation: Secure appropriate funding for tools, training, and staff. Technology typically represents only 30-40% of governance costs, with people and process development requiring the majority.

- Executive sponsorship: Maintain active leadership engagement throughout implementation. Quarterly governance reviews with executive sponsors can maintain momentum and address emerging obstacles.
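To make the budget guidance concrete, here is the arithmetic for a hypothetical total governance budget of 500,000 (in any currency). It uses the 30-40% technology share quoted above. The function is an illustrative helper, not a planning tool. Integer percentages keep the arithmetic exact for whole currency units.

```python
def split_budget(total: int, tech_pct_low: int = 30,
                 tech_pct_high: int = 40) -> dict[str, tuple[int, int]]:
    """Split a governance budget using the 30-40% technology rule of thumb."""
    tech_low = total * tech_pct_low // 100
    tech_high = total * tech_pct_high // 100
    return {
        "technology": (tech_low, tech_high),
        # Whatever technology doesn't take goes to people and process.
        "people_and_process": (total - tech_high, total - tech_low),
    }


if __name__ == "__main__":
    for bucket, (low, high) in split_budget(500_000).items():
        print(f"{bucket}: {low:,} to {high:,}")
```

Framed this way, a request that is mostly tool licenses, with little left for stewards and training, stands out quickly in budget discussions.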

The most common implementation failure is inadequate resourcing. A manufacturing company might struggle with adoption when relying on part-time data stewards without proper training or dedicated time.

Evolving your governance approach with industry trends

Build adaptability into your governance program:

- Monitor how AI, automation, and cloud platforms reshape governance possibilities. Active metadata platforms could automate previously manual governance tasks.

- Track evolving compliance requirements that affect your domains. New privacy regulations might necessitate adjustments to your data handling practices.

- Learn from peer organizations and governance communities. Industry forums can provide early insights into effective approaches and common pitfalls.

- Adapt governance to shifts in business strategy or structure. Mergers or new digital initiatives might require rapid governance expansion to new domains.

Static governance programs rapidly become obsolete. A financial services firm regularly reassessing its governance roadmap against industry developments could maintain compliance and competitive advantage despite regulatory changes.

Common pitfalls to avoid

Watch for these frequent implementation challenges:

- Perfectionism over progress: Seeking a perfect governance program often results in no program at all

- Tools without process: Investing in technology without addressing people and processes

- Lack of ownership: Failing to establish clear accountability for governance domains

- Ignoring cultural factors: Underestimating the change management aspects of governance

- Skipping the foundations: Rushing to advanced capabilities before establishing basics

Setting realistic long-term goals

Structure your roadmap around achievable longer-term milestones:

- Quarterly horizons: Set measurable objectives for 3-6 month periods. Within two quarters, you could target implementation of data quality monitoring for two high-priority domains.

- Annual strategic goals: Establish broader outcomes for 1-2 year timeframes. Within 18 months, you might aim for all critical data domains to reach level 3 maturity.

- Long-term vision alignment (2+ years): Outline how governance maturity enables future business capabilities while considering how emerging technologies might reshape your governance approach. For example: "Our 3-year goal is to enable fully domain-driven data operations with federated governance that supports both innovation and compliance."
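A goal such as "all critical data domains at level 3 within 18 months" is easier to track when each domain's assessed level is recorded as a number. This sketch assumes a hypothetical 1-5 maturity scale taken from your maturity assessment. The domain names and levels are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    name: str
    critical: bool
    maturity: int  # hypothetical 1-5 maturity scale from your assessment


def progress_toward_target(domains: list[Domain], target: int = 3) -> dict:
    """Summarize how many critical domains have reached the target maturity level."""
    critical = [d for d in domains if d.critical]
    levels_to_go = {d.name: target - d.maturity for d in critical if d.maturity < target}
    return {
        "at_target": len(critical) - len(levels_to_go),
        "critical_total": len(critical),
        "levels_to_go": levels_to_go,
    }


if __name__ == "__main__":
    domains = [
        Domain("customer", critical=True, maturity=3),
        Domain("product", critical=True, maturity=2),
        Domain("finance", critical=True, maturity=1),
        Domain("facilities", critical=False, maturity=1),
    ]
    print(progress_toward_target(domains))
```

Reviewing a summary like this each quarter connects the quarterly horizons to the annual goal. It shows which domains need the most attention and whether the 18-month target is still realistic.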

The frameworks and approaches we've explored provide a map, but your organization's journey will be unique. Adapt these ideas to your specific context, learn continuously, and remember that governance implementation is ultimately about enabling people to work more effectively with data.

Effective governance is as much about people and alignment as it is about processes and technology. Start small and let value creation guide your journey.
